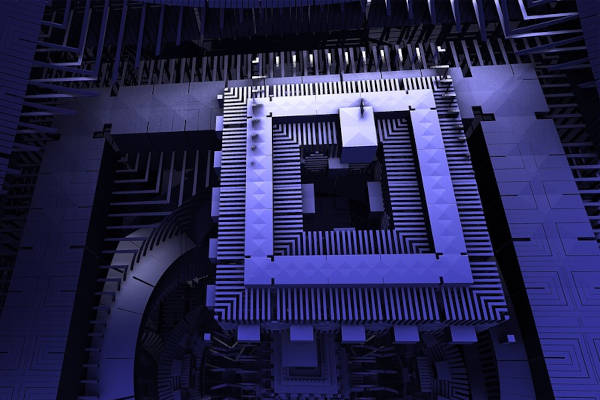

To understand Quantum Computing, a definition is necessary. Think of Quantum Computing as working on many possible solutions to a problem at once. It is essentially defined as:

“In the current architecture of a CPU, we have bits that are either one or zero. In a quantum computer, instead of bits we have qubits that exist in both states—one and zero—and everything in between, at the same time. So, when we do calculations, we can have all possible solutions to a problem being considered at once. The key thing is it can be much, much faster than current architectures. Also, it means we can do certain calculations which just are not practical using current computers.”
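One way to make the "both states at the same time" idea concrete is the state-vector picture, in which a register of n qubits is described by 2^n numbers called amplitudes. The sketch below is a classical illustration only, not a quantum program, and the function names `uniform_superposition` and `measurement_probabilities` are hypothetical helpers introduced for this example. It builds an equal superposition over three qubits and shows that each of the eight possible outcomes carries the same probability.

```python
from math import sqrt


def uniform_superposition(num_qubits: int) -> list[float]:
    """Amplitudes of an equal superposition over all 2**num_qubits basis states."""
    dimension = 2 ** num_qubits
    return [1 / sqrt(dimension)] * dimension


def measurement_probabilities(amplitudes: list[float]) -> list[float]:
    """Probability of observing each basis state: the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in amplitudes]


# Three qubits: 2**3 = 8 basis states, from 000 to 111.
state = uniform_superposition(3)
probabilities = measurement_probabilities(state)

for index, probability in enumerate(probabilities):
    print(f"{index:03b}: {probability:.3f}")
```

Note that the list doubles in length with every added qubit, which is why simulating large quantum registers on conventional hardware quickly becomes impractical.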

Although Quantum Computing models are for the most part considered “very simplistic”, there are proof-of-concept systems that have actually been made to work in private-sector organizations. The only barrier is that “they are not at a size which is adequate to calculate the prime factors in encryption.” It is quite possible that nation states are currently working to refine Quantum Computing to the point where it becomes practical.

Quantum Computing is considered a security threat because a sufficiently large quantum computer could find the prime factors of very large numbers easily, whereas conventional computing takes an impractically long time:

“When somebody creates a quantum computer of a suitable size, they will be able to crack many of our current secure encryption algorithms very easily. This is going to be a massive change for the way we keep data secure through encryption.”
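To see why factoring matters, consider a toy RSA-style key. The sketch below is an illustration under stated assumptions, not a real attack: the primes 10007 and 10009 are deliberately tiny, the helper names `trial_division_factor` and `recover_private_exponent` are hypothetical, and brute-force trial division stands in for the factoring step that a large quantum computer would perform far more efficiently. Once the modulus is factored, the private exponent follows directly.

```python
from math import isqrt


def trial_division_factor(n: int) -> tuple[int, int]:
    """Return (p, q) with p * q == n by brute-force search for the smallest factor."""
    if n % 2 == 0:
        return 2, n // 2
    for candidate in range(3, isqrt(n) + 1, 2):
        if n % candidate == 0:
            return candidate, n // candidate
    raise ValueError("n has no non-trivial factors")


def recover_private_exponent(n: int, e: int) -> int:
    """Derive the private exponent from the public key (n, e) by factoring n."""
    p, q = trial_division_factor(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse; requires Python 3.8+


# Toy public key built from two small primes (real keys use primes of 1,024+ bits).
n = 10007 * 10009
e = 65537

message = 424242
ciphertext = pow(message, e, n)

# An attacker who can factor n needs nothing but the public key.
d = recover_private_exponent(n, e)
recovered = pow(ciphertext, d, n)
print(recovered == message)
```

With real key sizes the loop in `trial_division_factor` would not finish in any useful time on conventional hardware, and that gap is what keeps such keys secure today.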

Since some algorithms are believed to be ‘quantum insecure’ (ones that rely on public key cryptography), the National Institute of Standards and Technology in the United States is currently conducting standardization work to identify quantum secure algorithms:

“Also, it is expected that keys of greater than 3,072 bits are going to be quantum secure for the foreseeable future, so we have an upgrade pathway. But we need to be developing systems so that we can swap out encryption algorithms as and when necessary.”
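The ability to swap algorithms "as and when necessary" is often approached by storing an algorithm identifier next to the protected data, so that old records stay readable while new records use a newer algorithm. The following is a minimal sketch of that pattern, assuming a hypothetical registry named `ALGORITHMS` and hypothetical helpers `protect` and `verify`. It uses HMAC integrity tags from the Python standard library as a stand-in, since the standard library ships no encryption ciphers; the same structure would apply to encryption algorithms.

```python
import hashlib
import hmac
from typing import Callable, Dict, Tuple

# Hypothetical registry: algorithm identifier -> function that computes a tag.
# Adding or retiring an algorithm means editing this table, not the callers.
ALGORITHMS: Dict[str, Callable[[bytes, bytes], bytes]] = {
    "hmac-sha256": lambda key, data: hmac.new(key, data, hashlib.sha256).digest(),
    "hmac-sha512": lambda key, data: hmac.new(key, data, hashlib.sha512).digest(),
}


def protect(key: bytes, data: bytes, algorithm: str = "hmac-sha256") -> Tuple[str, bytes]:
    """Return an envelope that records which algorithm produced the tag."""
    return algorithm, ALGORITHMS[algorithm](key, data)


def verify(key: bytes, data: bytes, envelope: Tuple[str, bytes]) -> bool:
    """Check a tag using whichever algorithm the envelope names."""
    name, tag = envelope
    if name not in ALGORITHMS:
        return False  # unknown or retired algorithm
    return hmac.compare_digest(ALGORITHMS[name](key, data), tag)


key = b"example-key-for-illustration-only"
record = b"customer record"

old_envelope = protect(key, record)                 # written before the upgrade
new_envelope = protect(key, record, "hmac-sha512")  # written after the upgrade

print(verify(key, record, old_envelope), verify(key, record, new_envelope))
```

Because each envelope names its own algorithm, a migration can proceed record by record instead of requiring everything to be converted at once.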