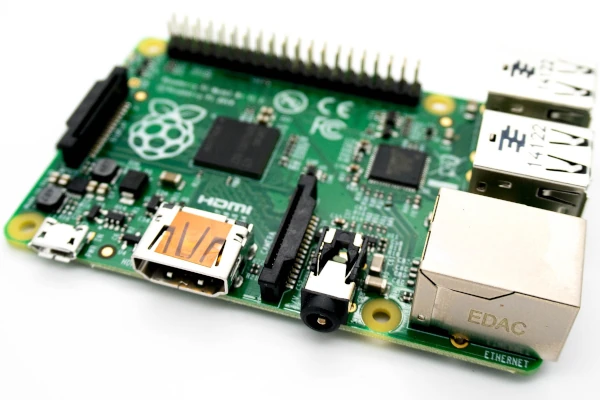

Designed specifically for compute-intensive AI workloads, IBM’s new POWER9 systems speed up deep learning framework training by 4x, according to the company, “allowing enterprises to build more accurate AI applications, faster.”

The POWER9-based AC922 Power Systems combine PCI-Express 4.0, next-generation NVIDIA NVLink and OpenCAPI, which IBM says lets them move data 9.5x faster than PCI-Express 3.0 based x86 systems. As a result, data scientists can “build applications faster, ranging from deep learning insights in scientific research, real-time fraud detection and credit risk analysis.”

The U.S. Department of Energy’s “Summit” and “Sierra,” the “soon-to-be most powerful data-intensive supercomputers in the world,” both use POWER9. Google has also shown interest in POWER9, especially

“the POWER9 OpenCAPI Bus and large memory capabilities [which] allow for further opportunities for innovation in Google data centers.”

Deep learning is defined as

“a fast growing machine learning method that extracts information by crunching through millions of processes and data to detect and rank the most important aspects of the data.”

PowerAI allows data scientists to deploy deep learning frameworks and libraries on the Power architecture in a short time. IBM has already reduced the learning curve for deep learning by introducing the PowerAI Distributed Deep Learning toolkit.

An open ecosystem is necessary for companies to deliver AI technologies and tools. IBM presents itself as leading the way for “innovation to thrive, fueling an open, fast-growing community of more than 300 OpenPOWER Foundation and OpenCAPI Consortium members.”