What are large language models (LLMs)? Large language models are deep learning algorithms that are pretrained on massive datasets and can recognize, summarize, translate, predict and generate text and other content.

While the first LLMs dealt solely with text, later iterations were trained on other types of data. These multimodal LLMs can recognize and generate images, audio, video and other forms of content.

LLMs power a variety of practical applications, such as translation apps like DeepL, medical research, customer support experiences and predictions for financial analysts.

Over the years, advances have expanded what LLMs can do: multimodal models give chatbots the ability to analyze and generate imagery, and AI-enhanced LLMs can solve complex problems and interact with other LLM systems.

A more robust LLM takes chatbots to a new level. Enter retrieval-augmented generation, or RAG. RAG is an efficient way to tailor LLMs to a particular dataset, and it works with any LLM.

RAG enhances the accuracy and reliability of generative AI models with facts fetched from external sources. By connecting an LLM with practically any external resource, RAG lets users chat with data repositories while also giving the LLM the ability to cite its sources. The user experience is as simple as pointing the chatbot toward a file or directory.
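To make the retrieve-then-generate idea more concrete, here is a minimal Python sketch. It does not show how any particular product implements RAG. It assumes simple word-count cosine similarity as a stand-in for the embedding search that real RAG systems typically use, and the names `Chunk`, `retrieve` and `build_prompt` are hypothetical. The sketch stops after building the augmented prompt. Sending that prompt to an LLM is left out.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # where the text came from, so the answer can cite it
    text: str


def tokenize(text: str) -> Counter:
    """Lowercase word counts; an assumed stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    """Return the k chunks most similar to the query (retrieval step)."""
    q = tokenize(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, tokenize(c.text)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, chunks: list[Chunk], k: int = 2) -> str:
    """Augment the user's question with retrieved, source-tagged passages."""
    context = "\n".join(f"[{c.source}] {c.text}" for c in retrieve(query, chunks, k))
    return (
        "Answer using only the context below and cite sources in brackets.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    docs = [
        Chunk("notes.txt", "The quarterly report is due on Friday."),
        Chunk("recipes.txt", "Preheat the oven to 200 degrees."),
        Chunk("team.txt", "Alice owns the quarterly report draft."),
    ]
    print(build_prompt("When is the quarterly report due?", docs))
```

Each retrieved passage carries its source tag into the prompt. That tag is what lets the model cite where a fact came from.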

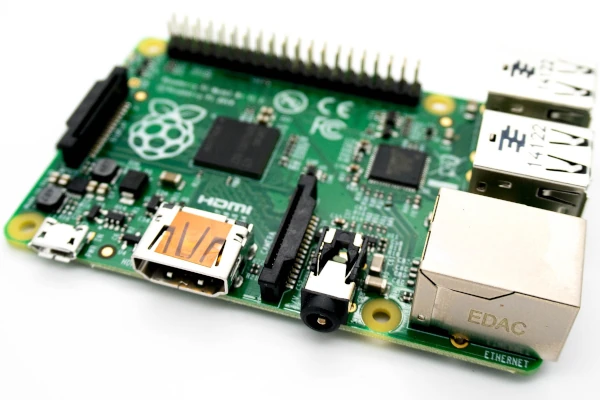

Running on a GeForce RTX or NVIDIA RTX professional GPU, NVIDIA’s Chat with RTX tech demo shows RAG connecting an LLM to a personal dataset. The demo is built with RAG, TensorRT-LLM and RTX acceleration.

Users can connect local files on a PC to a supported LLM by dropping the files into a folder and pointing the demo to that location. The demo can then respond to queries with quick, contextually relevant answers.
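The "point it at a folder" step usually starts with splitting the files into passages that can be searched. The sketch below is a hypothetical illustration in Python, not the demo's actual ingestion pipeline. It assumes plain-text `.txt` and `.md` files and fixed-size, overlapping word windows. The function names `chunk_words` and `index_folder` and the default sizes are illustrative choices only.

```python
from pathlib import Path


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once the remaining words are already covered by the previous window's tail.
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def index_folder(folder: str, suffixes=(".txt", ".md")) -> list[tuple[str, str]]:
    """Return (file name, chunk) pairs for every supported file under `folder`.

    Assumption: only plain-text files are handled; other formats are skipped.
    """
    index = []
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            index.extend((path.name, chunk) for chunk in chunk_words(text))
    return index
```

Overlapping windows reduce the chance that a relevant sentence is cut in half at a chunk boundary. Keeping the file name next to each chunk is what allows an answer to point back to its source.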

Rather than transferring critical and private data to the cloud, the demo processes and stores user data on the local PC, with no third parties or internet connection involved.

For more groundbreaking AI news, tune into NVIDIA GTC, a global AI developer conference running March 18-21 in San Jose, Calif., and online.